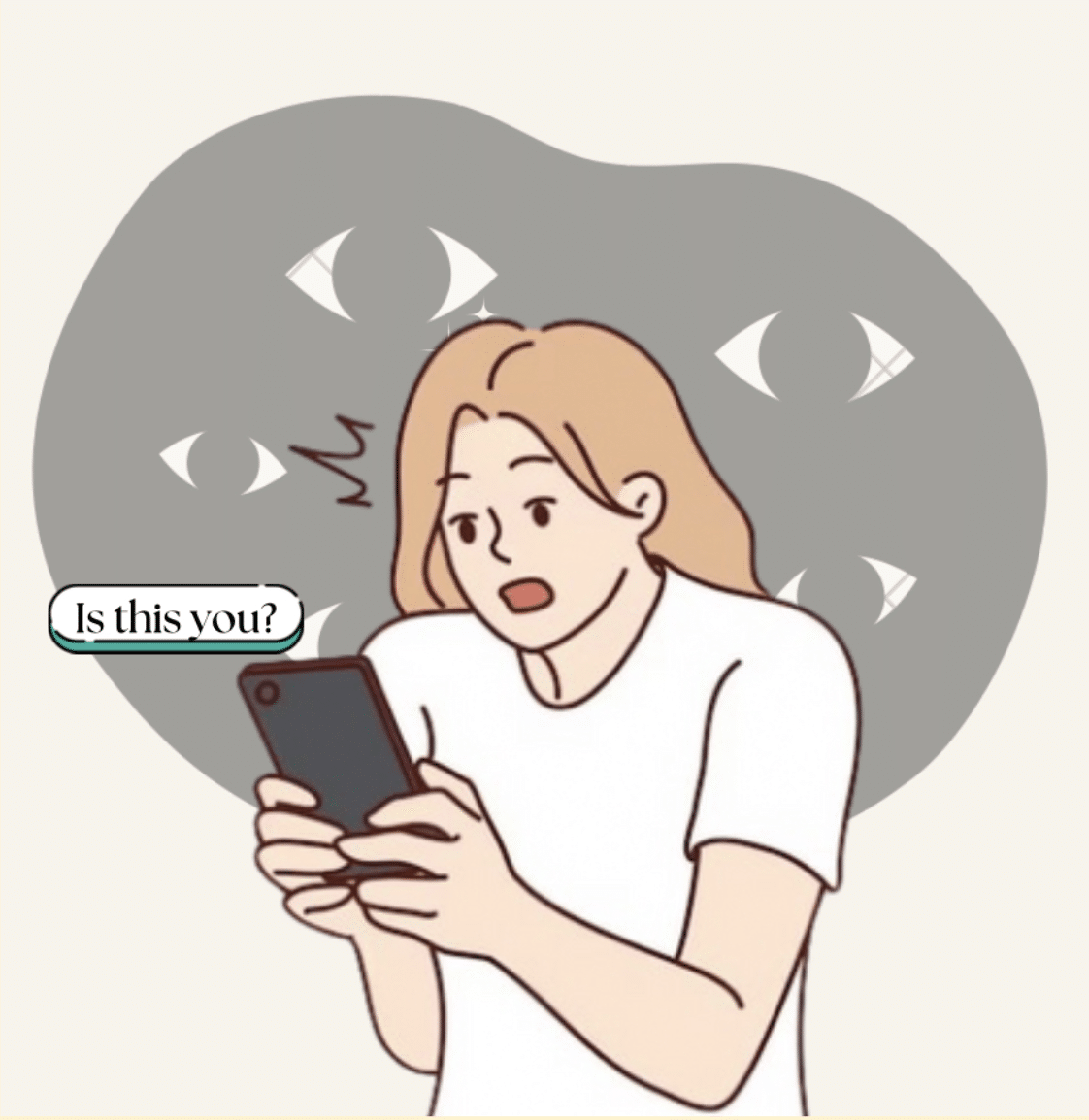

“Is this you?”: How AI Is Being Used to Exploit Teens and What You Can Do About It

Picture this: You are a regular teenage girl. As with most teenage girls, you like to post about yourself on your Instagram. Innocent pictures, like random school pictures, hangouts with your friends, and birthday posts. You also text your friends whenever you’re bored. Then one day, a text pops up stating the following.

“Is this you?”

You open it, and you find sexually explicit content of yourself. You know you haven’t done anything close to what these videos show, so how come your face is plastered onto them?

“That’s not me.”

Although most people assume that deepfakes and AI videos are for funny TikTok posts, charged political content (which falls under misinformation), or creating art without the need for an artist, they can also be used to make sexually explicit content of regular people, like yourself. The story outlined at the start of the article isn’t a figment of the imagination. Rather, it is the shared experience of South Korean schoolgirls who have had to deal with years of sexual abuse through chat apps like Telegram. These chats are filled with teenage boys and relatives, unlike the stereotypical “old man online” character attached to sexual abusers. As one PBS article details, the effects of nonconsensual deepfake content have been devastating to these victims’ lives: one of them tried to kill herself after finding videos of herself in explicit situations (Source).

It doesn’t stop in South Korea either. According to one Thorn article, an estimated 1 in 10 minors reported knowing of cases in which peers created nudes of other kids. It is a widespread epidemic, and although many victims suffer depression, anxiety, and deep isolation afterward, there is little to no push for legislation addressing nonconsensual deepfake content. However, there are some tips that you can follow yourself, as provided by the National Sexual Violence Resource Center.

Navigating Nonconsensual AI Imagery

Let’s be real about deepfakes.

Nicole F.

2024-2025 Youth Innovation Council Member

Need to talk?

Text NOFILTR to 741741 for immediate assistance.